Note (April 2025):

This post was originally written in early February, just after DeepSeek’s R1 model sent shockwaves through the AI world. Since then, the model has been downloaded thousands of times, sparked forks and spinoffs, and raised serious questions about the future of proprietary AI. The original post has been lightly updated for clarity and SEO.

Surprise!

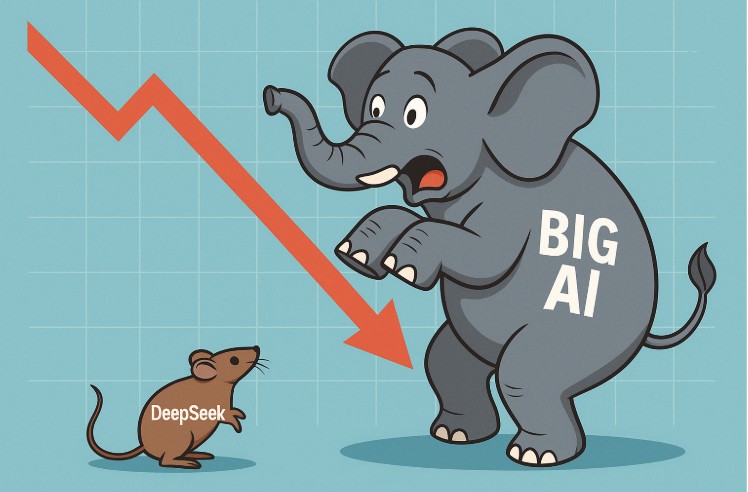

Last Sunday’s market chaos didn’t come out of nowhere. It had been quietly building throughout the week—until it suddenly exploded. The trigger? A little-noticed announcement from a small Chinese firm. That firm, DeepSeek, had just unveiled its R1 large language model, claiming it was built for less than $6 million.

At first, most shrugged. But over the following days, analysts began to dig in. And what they found changed everything.

By the end of the week, prominent news outlets like The Wall Street Journal were sounding alarms with headlines like “China’s DeepSeek AI Sparks U.S. Tech Sell-Off Fears.” The market opened Monday with investors on edge, and by the close the NASDAQ had lost over $1 trillion and Nvidia, the world’s most valuable company, had shed $589 billion in market value in a single day. It was the biggest one-day loss of market value for a single company in U.S. stock market history.

Let that sink in: an MIT-licensed, open-source model posted on Hugging Face had just upended the most important tech arms race in decades.

Industry Myopia

It’s staggering to think that the world’s most powerful innovation engines—stacked with elite technical talent and billions in R&D—were blindsided by an open-source release.

This wasn’t a stealthy attack on a niche. It happened in arguably the most watched and capital-intensive domain of the last 20 years. People have compared generative AI to the internet, the printing press, even the invention of sliced bread. Yet the industry failed to anticipate this kind of disruption.

The mindset in the AI world had become singular: whoever could hoard the most NVIDIA GPUs and scale up the largest data centers would win. While the major players doubled down on that narrative, a quant research firm in China quietly remixed open models using reinforcement learning, bundled the result under a permissive license, and published it for anyone to use.

And just like that, the rules changed.

Open Source Has Disrupted Before—But Never This Fast

Open source is no stranger to disruption. We’ve seen it again and again with Linux, MySQL, Kubernetes, PyTorch—technologies that slowly but surely redefined their markets.

But those shifts took years.

- Linux: Nearly a decade to gain serious enterprise traction

- MySQL: Several years to replace proprietary databases

- Kubernetes/PyTorch: 4–5 years to reshape container orchestration and machine learning

DeepSeek’s impact? Days.

A Historic Turning Point for AI

I can’t think of the last time an emerging technology blindsided the entire industry and shattered consensus thinking overnight.

Yes, you can argue about DeepSeek’s actual development cost. You can debate the sustainability of open models. You can even pull out Jevons Paradox and argue that cheaper, more efficient models will send GPU demand skyrocketing anyway. But none of that erases this simple fact:

An open-source model triggered a $1 trillion market correction.

Whatever happens next, DeepSeek R1 has already earned a place in the history books, and quite possibly in a future Harvard Business School (HBS) case study.

Join the Conversation

Was this a short-term overreaction, or the first real crack in the foundation of proprietary AI? Let me know what you think in the comments.

Pau for now…

Posted by Barton George